Spoiler alert: Yes, you can! And no cheating is involved. Read how we uploaded a large training dataset from SpaceNet 2 to Picterra, trained a model, and… beat the winners of machine learning competitions in no time!

SpaceNet competitions started four years ago with the goal of opening up very high-resolution (VHR) Earth observation datasets, which are usually inaccessible to researchers, to the broader world, and in particular to the Machine Learning & Computer Vision community. The idea was to bring ML expertise into the Remote Sensing & Earth observation community and to make the results openly available, further democratizing the extraction of analytics from EO imagery.

This worked very well. The first two SpaceNet competitions focused on building detection and footprints, while the third and fifth addressed road networks and travel time estimation. The fourth was specific to off-nadir VHR imagery, where tasking the satellite off-nadir produces strong perspective distortions in the images. The sixth challenge was the first to move into the SAR world, with building detection from SAR imagery. This year, the seventh challenge brings in the time component, using time series of Planetscope images to monitor urban development:

- Building detection v1 (Optical imagery)

- Building detection v2 (Optical imagery)

- Road Network Detection (Optical imagery)

- Off-nadir building detection (Optical imagery, off-nadir)

- Automated Road Network Extraction and Route Travel Time Estimation from Satellite Imagery (Optical imagery)

- Multi-Sensor All-Weather Mapping (SAR and Optical imagery)

- SpaceNet 7 Challenge: Multi-Temporal Urban Development (Planetscope optical images)

Competitions with large training datasets are a way to foster innovation, but the risk is that the winning solutions die in their GitHub repositories and are forgotten, because no community of developers builds on top of them. Over the years, CosmiQ Works has released more and more baseline algorithms and has done a great job of coping with a certain inconsistency over time.

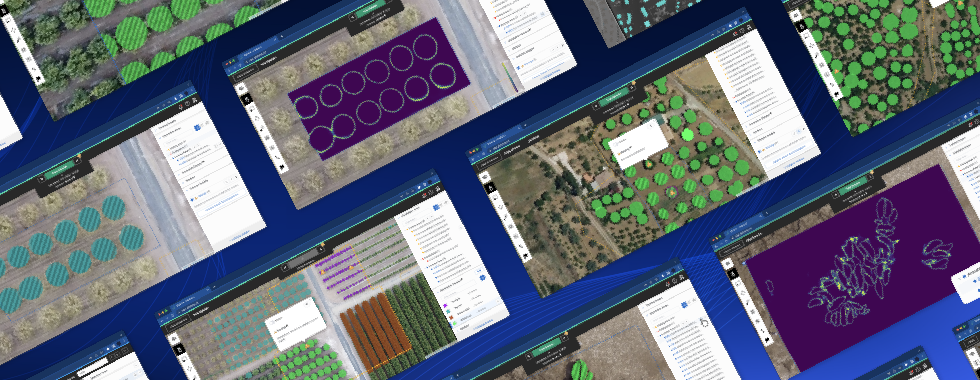

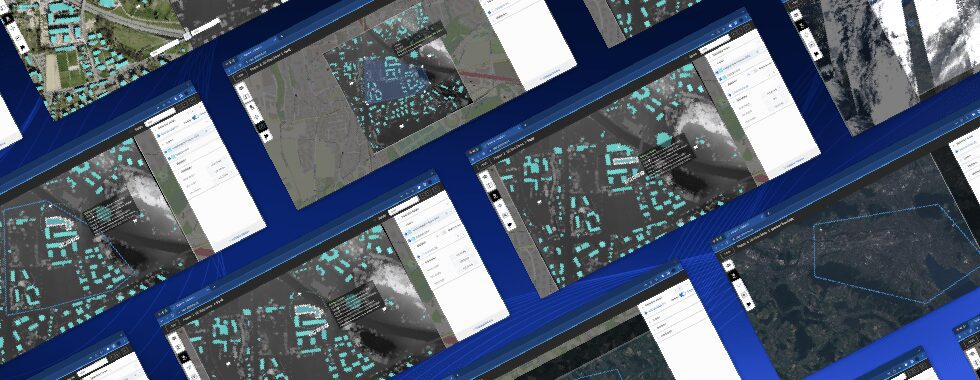

At Picterra, we contribute to this democratization of Machine Learning for Earth Observation by making it much more accessible, beyond the Machine Learning community. This involves effort in multiple directions: making satellite data easily accessible, providing an intuitive and user-friendly interface, and ensuring that our Machine Learning models are top-notch.

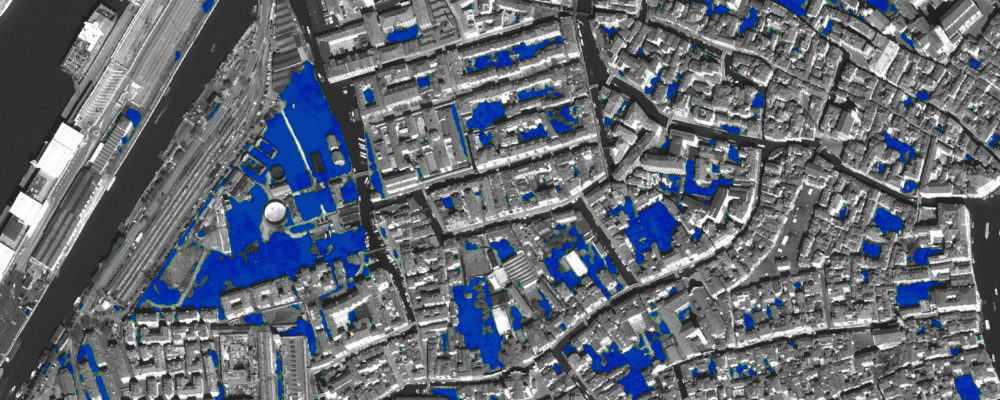

It is, of course, extremely tempting to evaluate the quality of our Machine Learning model on the SpaceNet 2 building detection dataset, one of the largest Earth observation datasets released for that task: SpaceNet 2 consists of 221,331 annotations (33,924 for testing) spread over 10,593 images (1,587 for testing). The competition winners put together a fairly complex solution: an ensemble of three models built on different "augmented" datasets (downsampled imagery, original imagery, and OpenStreetMap).
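The test counts above correspond to roughly 15% of the images (1,587 / 10,593 ≈ 0.15). Because the official SpaceNet test labels are not public, any comparison has to rely on a hold-out set drawn from the available data. The following is a minimal sketch of a per-city random split, assuming the images are already grouped by city (SpaceNet 2 covers Las Vegas, Paris, Shanghai and Khartoum). The function name, the 15% fraction and the sample identifiers are illustrative assumptions, not necessarily how our own test set was built.

```python
import random
from typing import Dict, List, Tuple


def split_by_city(
    image_ids_by_city: Dict[str, List[str]],
    test_fraction: float = 0.15,
    seed: int = 0,
) -> Tuple[List[str], List[str]]:
    """Randomly hold out `test_fraction` of the images of every city.

    Splitting within each city keeps every city represented in the test set.
    Returns (train_ids, test_ids).
    """
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train_ids: List[str] = []
    test_ids: List[str] = []
    for city in sorted(image_ids_by_city):  # sorted for a deterministic order
        ids = list(image_ids_by_city[city])
        rng.shuffle(ids)
        n_test = round(len(ids) * test_fraction)
        test_ids.extend(ids[:n_test])
        train_ids.extend(ids[n_test:])
    return train_ids, test_ids


# Hypothetical image identifiers for two cities
images = {
    "Vegas": [f"vegas_{i}" for i in range(100)],
    "Paris": [f"paris_{i}" for i in range(40)],
}
train, test = split_by_city(images, test_fraction=0.15, seed=42)
print(len(train), len(test))  # 119 21
```

Splitting inside each city, rather than over the pooled images, avoids a test set that happens to be dominated by the largest city.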

How to beat the winners of machine learning competitions? Training a SpaceNet model on Picterra...

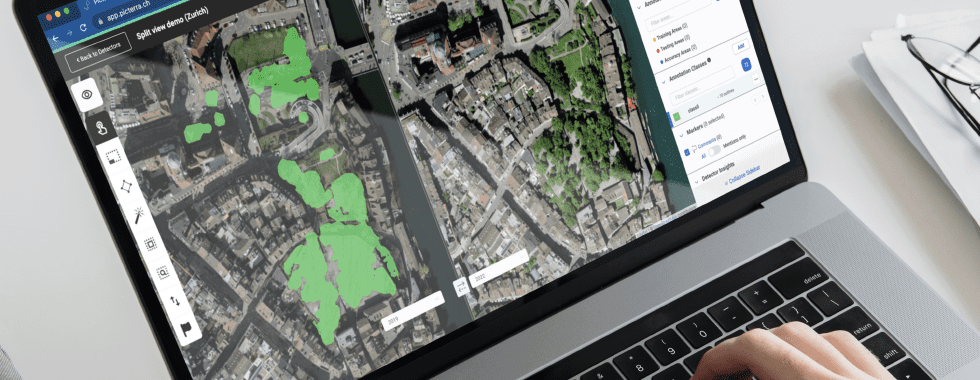

To import the large amount of training data contained in SpaceNet, we used the Picterra Python client library, which provides an API for importing massive datasets into Picterra.
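Conceptually, the import boils down to turning SpaceNet's GeoJSON label files into batches of building polygons and sending each batch to the API. The sketch below is an illustration under stated assumptions: `upload_fn` is a hypothetical placeholder for the actual Picterra client call (its real method names and signatures are not reproduced here), and packing each batch into one GeoJSON MultiPolygon is our own illustrative choice.

```python
import json
from typing import Callable, Iterable, Iterator, List


def iter_polygons(geojson_texts: Iterable[str]) -> Iterator[dict]:
    """Yield every Polygon geometry found in a sequence of GeoJSON FeatureCollections."""
    for text in geojson_texts:
        collection = json.loads(text)
        for feature in collection.get("features", []):
            geometry = feature.get("geometry")
            # Skip features without a polygon geometry (e.g. empty placeholders)
            if geometry and geometry.get("type") == "Polygon":
                yield geometry


def batched(items: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group items into lists of at most `size` elements."""
    batch: List[dict] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def upload_annotations(
    geojson_texts: Iterable[str],
    upload_fn: Callable[[dict], None],  # hypothetical stand-in for the real API call
    batch_size: int = 1000,
) -> int:
    """Send polygons in batches, one MultiPolygon per call; return how many were sent."""
    sent = 0
    for batch in batched(iter_polygons(geojson_texts), batch_size):
        multipolygon = {
            "type": "MultiPolygon",
            "coordinates": [polygon["coordinates"] for polygon in batch],
        }
        upload_fn(multipolygon)
        sent += len(batch)
    return sent
```

Passing a list's `append` method as `upload_fn` lets you dry-run the conversion on a few label files before touching the API. Bounded batches also keep individual requests small, instead of sending 200,000+ polygons in one payload.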

Once the training data has been uploaded to a new Picterra Custom detector, it's time to train it. Since this is a fairly large dataset, we'll take advantage of the "training time" advanced ML setting: with very large datasets like SpaceNet, it makes sense to set it to a high value. Here are some rough guidelines on how far you can push the training time:

The graph below shows how the validation F-score on SpaceNet evolved with training time. The F-score combines precision ("How correct is the model when it detects something?") and recall ("Of all the things it should detect, how many did the model actually detect?") into a single score that always lies between 0 (bad) and 1 (good). Even at 40,000 steps the score is still going up, so we could improve the model further than we did in this initial test.
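To make the relationship concrete, here is a small sketch of how precision, recall and the combined score are computed from detection counts. It assumes the F-score is the usual F1 score (the harmonic mean of precision and recall), which is the metric SpaceNet uses; the counts in the usage line are made up.

```python
def f_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """F1 score: the harmonic mean of precision and recall, always in [0, 1]."""
    predicted = true_positives + false_positives  # everything the model detected
    actual = true_positives + false_negatives     # everything it should have detected
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted  # how correct the detections are
    recall = true_positives / actual        # how many targets were found
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# 80 correct detections, 20 false alarms, 40 missed buildings
print(round(f_score(80, 20, 40), 3))  # 0.727
```

Because it is a harmonic mean, the score stays low unless both precision and recall are high, so a model cannot score well by detecting everything (high recall, low precision) or by detecting almost nothing (high precision, low recall).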

… and getting a virtual first place!

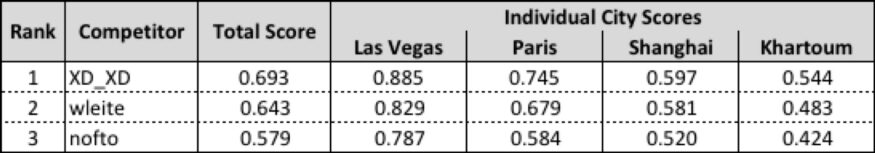

After training the model on Picterra, we used the API to run it on the SpaceNet validation set and obtain a final score that we could compare to the official SpaceNet leaderboard. Note that since the ground truth for the final SpaceNet test set is not public, we could only score on a random test set drawn from the different cities of the challenge. The comparison is therefore not 100% equivalent, but it is the best we can do.

Source: https://medium.com/the-downlinq/2nd-spacenet-competition-winners-code-release-c7473eea7c11

And well, with our final score of 0.722, we would actually have taken first place! This is quite an achievement, given that it required zero Machine Learning knowledge and no tweaking of our model for this particular task. So, this is how we beat the winners of machine learning competitions!
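SpaceNet's building-detection metric is an F1 score in which a predicted footprint counts as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth footprint is at least 0.5. The sketch below illustrates that matching logic under simplifying assumptions: footprints are axis-aligned boxes rather than polygons, and matching is greedy in prediction order. It is not the official SpaceNet scoring code, nor the exact pipeline behind the 0.722 score.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def score_detections(
    predictions: List[Box], ground_truth: List[Box], threshold: float = 0.5
) -> float:
    """F1 score where each ground-truth box can be matched by at most one prediction."""
    unmatched = set(range(len(ground_truth)))
    true_positives = 0
    for pred in predictions:
        # Best still-unmatched ground truth with IoU >= threshold, if any
        best_idx, best_iou = None, threshold
        for idx in sorted(unmatched):
            overlap = iou(pred, ground_truth[idx])
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is not None:
            unmatched.discard(best_idx)
            true_positives += 1
    false_positives = len(predictions) - true_positives
    false_negatives = len(ground_truth) - true_positives
    denominator = 2 * true_positives + false_positives + false_negatives
    return 2 * true_positives / denominator if denominator else 0.0


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(0, 0, 10, 9), (50, 50, 60, 60)]  # one good match, one false alarm
print(score_detections(predictions, ground_truth))  # 0.5
```

The one-to-one matching matters: without it, several overlapping predictions on the same building would all count as true positives and inflate the score.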

Conclusion

If you have a large amount of instance segmentation, object detection or semantic segmentation data and want to quickly train a model on it, please get in touch with us. We would be happy to guide you through using our API and platform to replicate what we did here.

This experiment shows that Picterra can scale from very few annotations (e.g. 56 for country-scale detections) to a large number of annotations (here more than 200,000!). This is because, under the hood, our Machine Learning model automatically adapts to the particularities of your dataset, and we put a lot of effort into making the right training decisions, such as specific sampling strategies, multi-objective losses, and hyperparameter tuning.

Authors: